K8S项目实践(03): 随机调度器

简介

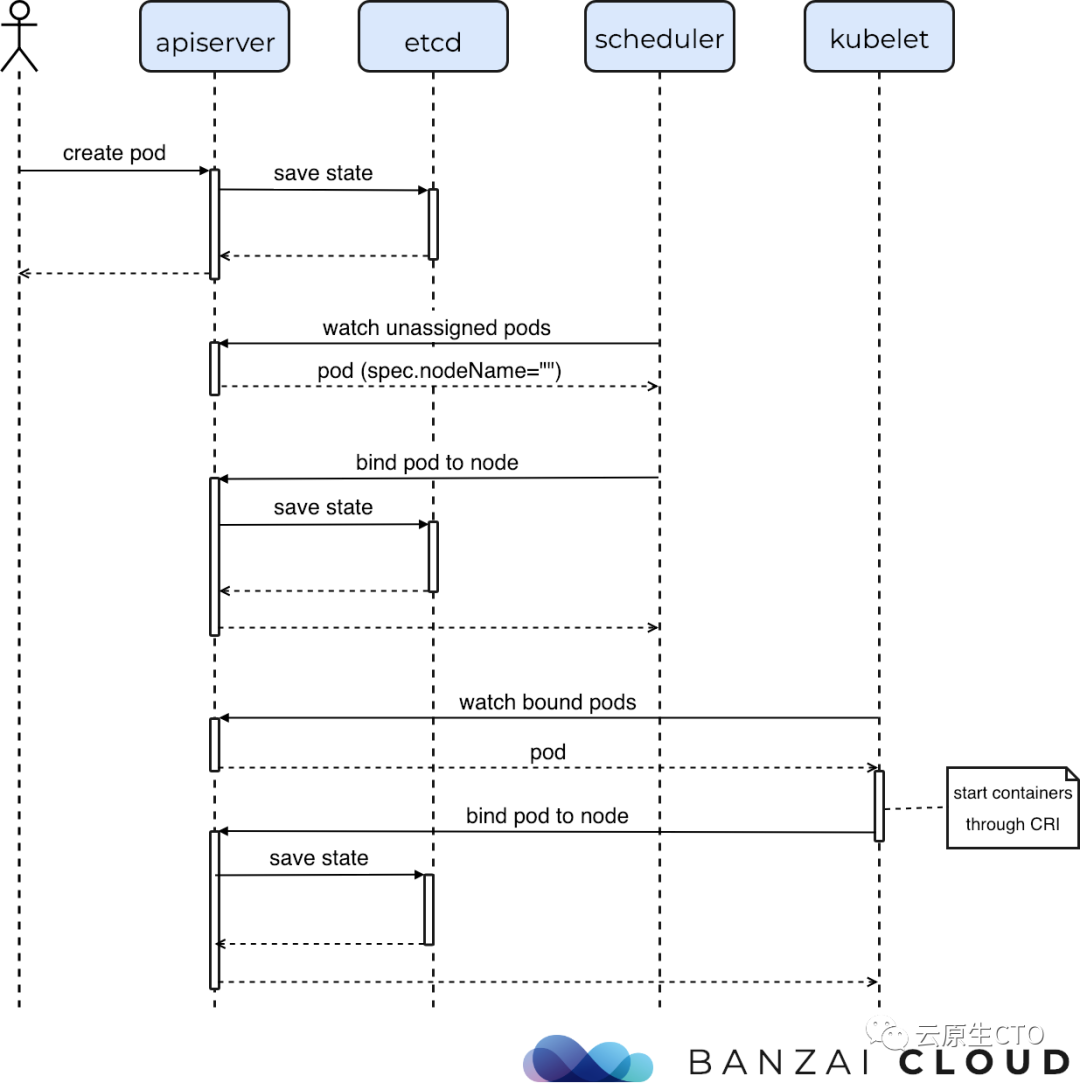

POD创建过程

1、 由kubectl解析创建pod的yaml,发送创建pod请求到APIServer。

2、 APIServer首先做权限认证,然后检查信息并把数据存储到ETCD里,创建deployment资源初始化。

3、 kube-controller通过list-watch机制,检查发现新的deployment,将资源加入到内部工作队列,检查到资源没有关联pod和replicaset,然后创建rs资源,rs controller监听到rs创建事件后再创建pod资源。

4、 scheduler 监听到pod创建事件,执行调度算法,将pod绑定到合适节点,然后告知APIServer更新pod的spec.nodeName

5、 kubelet 每隔一段时间通过其所在节点的NodeName向APIServer拉取绑定到它的pod清单,并更新本地缓存。

6、 kubelet发现新的pod属于自己,调用容器API来创建容器,并向APIService上报pod状态。

7、 Kub-proxy为新创建的pod注册动态DNS到CoreOS。为Service添加iptables/ipvs规则,用于服务发现和负载均衡。

8、 deploy controller对比pod的当前状态和期望来修正状态。

调度器介绍

从上述流程中,我们能大概清楚kube-scheduler的主要工作,负责整个k8s中pod选择和绑定node的工作,这个选择的过程就是应用调度策略,包括NodeAffinity、PodAffinity、节点资源筛选、调度优先级、公平调度等等,而绑定便就是将pod资源定义里的nodeName进行更新。

设计

kube-scheduler的设计有两个历史阶段版本:

- 基于谓词(predicate)和优先级(priority)的筛选。

- 基于调度框架的调度器,新版本已经把所有的旧的设计都改造成扩展点插件形式(1.19+)。

所谓的谓词和优先级都是对调度算法的分类,在scheduler里,谓词调度算法是来选择出一组能够绑定pod的node,而优先级算法则是在这群node中进行打分,得出一个最高分的node。

而调度框架的设计相比之前则更复杂一点,但确更加灵活和便于扩展,关于调度框架的设计细节可以查看官方文档——624-scheduling-framework,当然我也有一遍文章对其做了翻译还加了一些便于理解的补充——KEP: 624-scheduling-framework。总结来说调度框架的出现是为了解决以前webhooks扩展器的局限性,一个是扩展点只有:筛选、打分、抢占、绑定,而调度框架则在这之上又细分了11个扩展点;另一个则是通过http调用扩展进程的方式其实效率不高,调度框架的设计用的是静态编译的方式将扩展的程序代码和scheduler源码一起编译成新的scheduler,然后通过scheduler配置文件启用需要的插件,在进程内就能通过函数调用的方式执行插件。

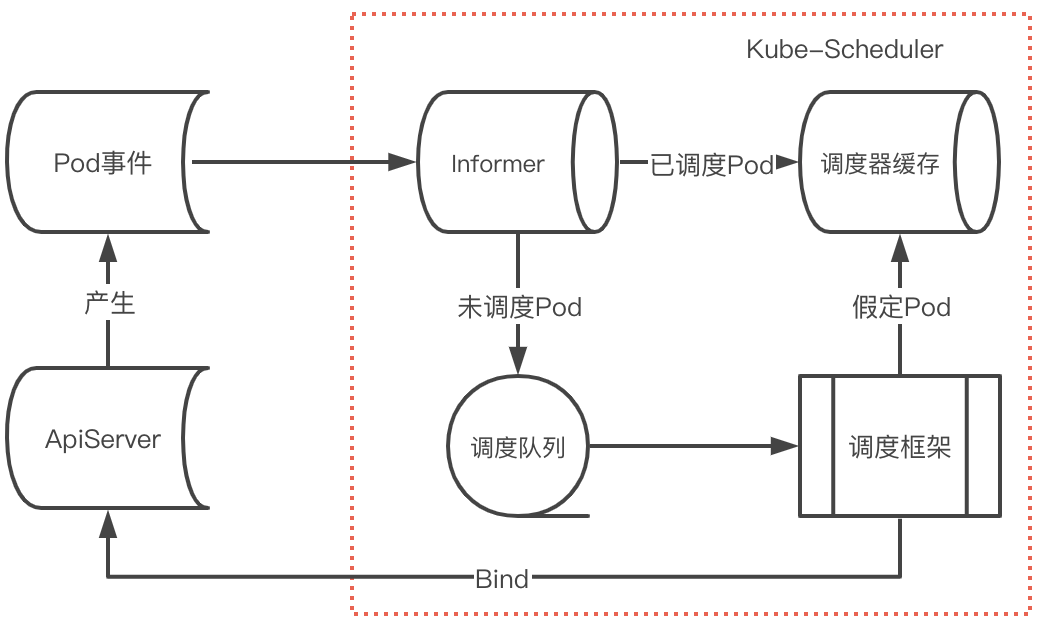

上面是一个简略版的调度器处理pod流程:

首先scheduler会启动一个client-go的Informer来监听Pod事件(不只Pod其实还有Node等资源变更事件),这时候注册的Informer回调事件会区分Pod是否已经被调度(spec.nodeName),已经调度过的Pod则只是更新调度器缓存,而未被调度的Pod会加入到调度队列,然后经过调度框架执行注册的插件,在绑定周期前会进行Pod的假定动作,从而更新调度器缓存中该Pod状态,最后在绑定周期执行完向ApiServer发起BindAPI,从而完成了一次调度过程。

实现

创建调度器

1、获取集群kubeconfig配置。

2、调用client-go生成clientset。

3、填充调度器相关参数, 包含获取node列表和关注的POD。

4、返回调度器

func NewScheduler(podQueue chan *v1.Pod, quit chan struct{}) Scheduler {

config, err := rest.InClusterConfig()

if err != nil {

log.Fatal(err)

}

clientset, err := kubernetes.NewForConfig(config)

if err != nil {

log.Fatal(err)

}

return Scheduler{

clientset: clientset,

podQueue: podQueue,

nodeLister: initInformers(clientset, podQueue, quit),

predicates: []predicateFunc{

randomPredicate,

},

priorities: []priorityFunc{

randomPriority,

},

}

}

运行调度器

不间断的从关注的POD列表中选出进行调度。

找合适节点

1、找到可用节点列表

2、给节点随机打100以内的分数

3、选择分数最高的节点

func (s *Scheduler) findFit(pod *v1.Pod) (string, error) {

nodes, err := s.nodeLister.List(labels.Everything())

if err != nil {

return "", err

}

filteredNodes := s.runPredicates(nodes, pod)

if len(filteredNodes) == 0 {

return "", errors.New("failed to find node that fits pod")

}

priorities := s.prioritize(filteredNodes, pod)

return s.findBestNode(priorities), nil

}

绑定POD

func (s *Scheduler) bindPod(ctx context.Context, p *v1.Pod, node string) error {

opts := metav1.CreateOptions{}

return s.clientset.CoreV1().Pods(p.Namespace).Bind(ctx, &v1.Binding{

ObjectMeta: metav1.ObjectMeta{

Name: p.Name,

Namespace: p.Namespace,

},

Target: v1.ObjectReference{

APIVersion: "v1",

Kind: "Node",

Name: node,

},

}, opts)

}

发送event事件

func (s *Scheduler) emitEvent(ctx context.Context, p *v1.Pod, message string) error {

timestamp := time.Now().UTC()

opts := metav1.CreateOptions{}

_, err := s.clientset.CoreV1().Events(p.Namespace).Create(ctx, &v1.Event{

Count: 1,

Message: message,

Reason: "Scheduled",

LastTimestamp: metav1.NewTime(timestamp),

FirstTimestamp: metav1.NewTime(timestamp),

Type: "Normal",

Source: v1.EventSource{

Component: schedulerName,

},

InvolvedObject: v1.ObjectReference{

Kind: "Pod",

Name: p.Name,

Namespace: p.Namespace,

UID: p.UID,

},

ObjectMeta: metav1.ObjectMeta{

GenerateName: p.Name + "-",

},

}, opts)

if err != nil {

return err

}

return nil

}

查看events信息可以看出random-scheduler打出的“laced pod [default/sleep-5b6fd9944c-5scxv] on k8s-master”等信息。”

27s Normal Scheduled pod/sleep-5b6fd9944c-5scxv Placed pod [default/sleep-5b6fd9944c-5scxv] on k8s-master

验证

编译

$ make docker-image

$ make docker-push

部署

$ kubectl apply -f rbac.yaml

$ kubectl apply -f deployment.yaml

$ kubectl apply -f sleep.yaml

把sleep.yaml里改为schedulerName: random-scheduler就可以使用该调度器。

[root@k8s-master deployment]# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default httpbin-master 1/1 Running 2 (11h ago) 3d22h 10.244.0.36 k8s-master <none> <none>

default httpbin-worker 1/1 Running 2 (11h ago) 3d22h 10.244.2.15 k8s-work2 <none> <none>

default netshoot-master 1/1 Running 2 (11h ago) 3d22h 10.244.0.35 k8s-master <none> <none>

default netshoot-worker 1/1 Running 2 (11h ago) 3d22h 10.244.2.14 k8s-work2 <none> <none>

default random-scheduler-6dc78999cc-vnxzg 1/1 Running 0 9m 10.244.0.37 k8s-master <none> <none>

default sleep-5b6fd9944c-7rn5m 1/1 Running 0 11h 10.244.1.6 k8s-work1 <none> <none>

default sleep-5b6fd9944c-bwb9t 1/1 Running 0 11h 10.244.0.38 k8s-master <none> <none>

kube-system coredns-6d8c4cb4d-ck2x5 1/1 Running 20 (11h ago) 19d 10.244.0.34 k8s-master <none> <none>

kube-system coredns-6d8c4cb4d-mbctj 1/1 Running 20 (11h ago) 19d 10.244.0.33 k8s-master <none> <none>

kube-system etcd-k8s-master 1/1 Running 22 (11h ago) 19d 172.25.140.216 k8s-master <none> <none>

kube-system kube-apiserver-k8s-master 1/1 Running 24 (11h ago) 19d 172.25.140.216 k8s-master <none> <none>

kube-system kube-controller-manager-k8s-master 1/1 Running 22 (11h ago) 19d 172.25.140.216 k8s-master <none> <none>

kube-system kube-proxy-dnsjg 1/1 Running 21 (11h ago) 19d 172.25.140.215 k8s-work1 <none> <none>

kube-system kube-proxy-r84lg 1/1 Running 22 (11h ago) 19d 172.25.140.216 k8s-master <none> <none>

kube-system kube-proxy-tbkx2 1/1 Running 20 (11h ago) 19d 172.25.140.214 k8s-work2 <none> <none>

kube-system kube-scheduler-k8s-master 1/1 Running 22 (11h ago) 19d 172.25.140.216 k8s-master <none> <none>

kube-system minicni-node-2xq2d 1/1 Running 2 (11h ago) 3d23h 172.25.140.214 k8s-work2 <none> <none>

kube-system minicni-node-dsq8c 1/1 Running 2 (11h ago) 3d23h 172.25.140.216 k8s-master <none> <none>

kube-system minicni-node-h8hm8 1/1 Running 2 (11h ago) 3d23h 172.25.140.215 k8s-work1 <none> <none>

可以看出, random-scheduler-6dc78999cc-vnxzg 和 sleep pod已正常变成Running状态。

验证

[root@k8s-master deployment]# kubectl logs random-scheduler-6dc78999cc-vnxzg

I'm a scheduler!

2022/12/13 12:12:02 New Node Added to Store: k8s-master

2022/12/13 12:12:02 New Node Added to Store: k8s-work1

2022/12/13 12:12:02 New Node Added to Store: k8s-work2

found a pod to schedule: default / sleep-5b6fd9944c-bwb9t

2022/12/13 12:12:02 nodes that fit:

2022/12/13 12:12:02 k8s-master

2022/12/13 12:12:02 k8s-work1

2022/12/13 12:12:02 k8s-work2

2022/12/13 12:12:02 calculated priorities: map[k8s-master:79 k8s-work1:68 k8s-work2:15]

Placed pod [default/sleep-5b6fd9944c-bwb9t] on k8s-master

found a pod to schedule: default / sleep-5b6fd9944c-7rn5m

2022/12/13 12:12:02 nodes that fit:

2022/12/13 12:12:02 k8s-master

2022/12/13 12:12:02 k8s-work1

2022/12/13 12:12:02 calculated priorities: map[k8s-master:26 k8s-work1:50]

Placed pod [default/sleep-5b6fd9944c-7rn5m] on k8s-work1

[root@k8s-master deployment]# kubectl logs sleep-5b6fd9944c-7rn5m

[root@k8s-master deployment]#

从random-scheduler日志看, sleep容器经过random-scheduler进行调度的。

[root@k8s-master deployment]# k get events

- LAST SEEN TYPE REASON OBJECT MESSAGE

39m Normal Pulled pod/netshoot-master Container image "nicolaka/netshoot:latest" already present on machine

39m Normal Created pod/netshoot-master Created container centos

39m Normal Started pod/netshoot-master Started container centos

39m Normal Pulled pod/netshoot-worker Container image "nicolaka/netshoot:latest" already present on machine

39m Normal Created pod/netshoot-worker Created container centos

39m Normal Started pod/netshoot-worker Started container centos

27s Normal Scheduled pod/sleep-5b6fd9944c-5scxv Placed pod [default/sleep-5b6fd9944c-5scxv] on k8s-master

26s Normal Pulled pod/sleep-5b6fd9944c-5scxv Container image "tutum/curl" already present on machine

26s Normal Created pod/sleep-5b6fd9944c-5scxv Created container sleep

26s Normal Started pod/sleep-5b6fd9944c-5scxv Started container sleep

50s Normal Killing pod/sleep-5b6fd9944c-7rn5m Stopping container sleep

50s Normal Killing pod/sleep-5b6fd9944c-bwb9t Stopping container sleep

27s Normal Scheduled pod/sleep-5b6fd9944c-rccmj Placed pod [default/sleep-5b6fd9944c-rccmj] on k8s-work2

26s Normal Pulling pod/sleep-5b6fd9944c-rccmj Pulling image "tutum/curl"

27s Normal SuccessfulCreate replicaset/sleep-5b6fd9944c Created pod: sleep-5b6fd9944c-rccmj

27s Normal SuccessfulCreate replicaset/sleep-5b6fd9944c Created pod: sleep-5b6fd9944c-5scxv

27s Normal ScalingReplicaSet deployment/sleep Scaled up replica set sleep-5b6fd9944c to 2

[root@k8s-master deployment]#

查看events信息可以看出random-scheduler打出的“laced pod [default/sleep-5b6fd9944c-5scxv] on k8s-master”等信息。”

参考资料

[01] k8s调度器介绍(调度框架版本)

[02] client-go功能详解

[03] 一篇文章搞懂Go语言中的Context]

[04] 深入理解k8s中的informer机制]

作者:mospan

微信关注:墨斯潘園

本文出处:http://mospany.github.io/2022/12/11/k8s-random-scheduler/

文章版权归本人所有,欢迎转载,但未经作者同意必须保留此段声明,且在文章页面明显位置给出原文连接,否则保留追究法律责任的权利。